When it comes to improving the performance of database queries, one of the most important areas to look at is indexes. Proper indexes can make queries run hundreds, if not thousands, of times faster. Despite this, indexes are often neglected by software developers. In this article I will talk about the basics of using indexes with Microsoft SQL Server.

First, let’s talk about what an index is. An index on a database table is a lot like the index in the back of a book. If a book didn’t have an index and I needed to find every occurrence of a certain word, I would have to read every page in the book to find them. When the book does have an index, I can quickly scan through the index and find the word I am looking for, which will point me to where to find it in the book. Since the index is in alphabetical order, I don’t even need to read the whole thing; I can quickly jump to the page that has the word I am looking for.

When you create an index on a table, the server will store the indexed field or fields in alphabetical/numeric order so that any value in those fields can be rapidly found in the index. Let’s take a look at some queries and how they can be improved with indexes.

I have included a script along with this article that will create a very simple example of a sales order header table and will populate it with some semi-random data. Simply run this script on a test database and it will create a table called SalesOrder and fill it with data.
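If you don't have the script handy, a minimal stand-in might look like the following. This is only a sketch: the column types, the row count, and the value ranges are assumptions, not the contents of the original script, so the read counts you see will differ from the ones quoted in this article.

```sql
-- Hypothetical stand-in for the article's setup script. Column types,
-- row count and value ranges are assumptions made for illustration.
CREATE TABLE dbo.SalesOrder
(
    OrderNumber varchar(10) NOT NULL,
    Customer    varchar(10) NOT NULL,
    OrderDate   date        NOT NULL
);

-- Generate 10,000 sequential order numbers starting at 10000, each with a
-- pseudo-random customer (30000-30499) and a pseudo-random date in 2015.
WITH Numbers AS
(
    SELECT TOP (10000)
           ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) AS n
    FROM sys.all_objects AS a
    CROSS JOIN sys.all_objects AS b
)
INSERT INTO dbo.SalesOrder (OrderNumber, Customer, OrderDate)
SELECT CAST(9999 + n AS varchar(10)),
       CAST(30000 + ABS(CHECKSUM(NEWID()) % 500) AS varchar(10)),
       DATEADD(DAY, ABS(CHECKSUM(NEWID()) % 365), CAST('20150101' AS date))
FROM Numbers;
```

The table is deliberately created without a primary key or any index, so it starts out as a heap and the first query has nothing to use but a table scan.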

To analyze the performance of the queries we will use two tools within SQL Server Management Studio. First, before executing each query we will run the command “set statistics io on” which will show how many reads the server does from each table in the query. After the query we will run “set statistics io off” to turn this off. The second tool is the Execution Plan. You turn this on by selecting Include Actual Execution Plan from the Query menu before you run the query. We will talk about how to use these two tools in more detail when we execute our first query.

Let’s start out with this query…

set statistics io on

select OrderNumber from SalesOrder where orderNumber = '10017'

set statistics io off

Run this query, then click on the Messages tab to look at the statistics. You will see something like this:

Table 'SalesOrder'. Scan count 1, logical reads 59, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

The two values we want to focus on are logical reads and physical reads. All data in SQL Server is stored in 8K pages, so these values are the number of pages that were read during the execution of the query. A logical read means the page was read from the in-memory cache. A physical read means the page was not in the cache and had to be read from disk first; once a page is read from disk it can stay cached in memory in case it is needed again. This query did 59 logical reads and no physical reads because the pages were already cached, but if the data had not been accessed recently those pages would have required physical reads as well. Either way we want to try to minimize the number of logical reads. Our data records are pretty small, so there are a lot of records in each page, which means 59 reads covered a lot of records just to get the one we were looking for.

To understand why this happened let’s look at the Execution Plan tab. If you don’t see this tab be sure “Include Actual Execution Plan” is selected on the Query menu.

This shows that to execute the query the server did a Table Scan, which means it had to read every row in the table to find the one we are looking for. It doesn’t know that OrderNumber is unique, so it doesn’t stop even when it finds the record, because it assumes that there might be more than one record with the same order number.
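One way to check that a table scan really touches every page is to compare the logical reads with the number of pages the table occupies. The sketch below uses the sys.dm_db_partition_stats view; it assumes your login has permission to view database state, and the numbers it returns depend entirely on your test data.

```sql
-- Pages and rows per index on SalesOrder.
-- index_id 0 is the heap (a table with no clustered index),
-- 1 is a clustered index, and values above 1 are nonclustered indexes.
SELECT index_id,
       used_page_count,
       row_count
FROM sys.dm_db_partition_stats
WHERE object_id = OBJECT_ID('dbo.SalesOrder');
```

If the page count for index_id 0 is close to the logical reads reported for the query, the scan read essentially the whole table.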

Now let’s create an index that will make this query more efficient. This statement will create an index on the SalesOrder table for the OrderNumber column. Don’t worry about the NONCLUSTERED keyword; we will talk about this later.

CREATE NONCLUSTERED INDEX IX_SalesOrder ON dbo.SalesOrder ( OrderNumber )

With the index created let’s re-run the original query and look at the results. Here is the statistics output:

Table 'SalesOrder'. Scan count 1, logical reads 2, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

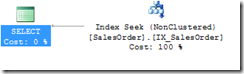

You can see that the number of reads has dropped from 59 down to only 2 which is a considerable improvement. Let’s look at the execution plan:

The Table Scan has changed to an Index Seek. Index Seeks are the best case for a query. This means it’s using the index to rapidly find the record(s) we are looking for.
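If order numbers really are unique, the index can say so, which tells the server that at most one record can match a given order number. The following is only a sketch of that option: it assumes every OrderNumber in the test data is distinct, and IX_SalesOrder_Unique is a made-up name that is not used anywhere else in this article.

```sql
-- Assumption: every OrderNumber in SalesOrder is distinct. If the data
-- contains duplicates, this statement fails with a duplicate key error.
-- IX_SalesOrder_Unique is a hypothetical name chosen for this example.
CREATE UNIQUE NONCLUSTERED INDEX IX_SalesOrder_Unique ON dbo.SalesOrder ( OrderNumber )

-- Drop it again so the rest of the walkthrough matches the article's indexes.
DROP INDEX IX_SalesOrder_Unique ON dbo.SalesOrder
```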

So far we have only been returning the OrderNumber, so now let’s return both the OrderNumber and the Customer:

set statistics io on

select OrderNumber,Customer from SalesOrder

where orderNumber = '10017'

set statistics io off

If you look at the statistics you will see it has gone up from two reads to three. Let’s look at the execution plan to see why.

The query still uses the Index Seek but we now have a second step which is a Lookup. To understand this you need to understand how SQL Server stores indexes. When a NONCLUSTERED index is created, the values in the field being indexed are copied to another part of the database in sorted order. Each of these index records also has a pointer back to the original data, which is where all the fields are stored. Since the index and the data are stored in different pages in the database, once the value we are looking for is found in the index, the server must read the corresponding data page to get the customer field. This lookup isn’t a big issue for this query since we only have one record to look up, but it would become a bigger problem if we were returning multiple records. One way we could fix this is to add the Customer field to the index.

drop INDEX IX_SalesOrder ON dbo.SalesOrder

CREATE NONCLUSTERED INDEX IX_SalesOrder_1 ON dbo.SalesOrder

(

OrderNumber,

Customer

)

These statements drop and re-create the index so it includes both the OrderNumber and Customer. If you re-run the previous query you will see that the lookup goes away. The server uses the index to find the order you are looking for, and since the customer is part of the index it no longer needs to look up the data page to get the customer field value.

Now that we have Customer as part of an index, let’s try to run a query on it.

select OrderNumber from SalesOrder

where Customer = '30075'

Here is the execution plan:

Notice that we are now getting an Index Scan instead of a Seek. When you create an index with multiple fields, the index record is created by appending the fields together in the order you specified them when you created the index. So if a record has a customer of 30075 and an order number of 10048, the index essentially stores “1004830075”. Since the index is sorted by the order number first, the server cannot use an Index Seek to find the customer number, but it can scan through all the index records to find the customer. The Index Scan is preferable to a Table Scan because an index record will usually be much smaller than the table record, so there are a lot more records per page, which results in fewer page reads. This query required 40 page reads instead of the 59 that were required for the whole table. This difference would get even larger if there were more fields in the table.
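To see the effect of field order for yourself, you could compare a query that filters on the leading field of the index with one that filters only on the second field. This is a sketch against the IX_SalesOrder_1 index created above; the literal values are just examples, and the first query may return no rows if that order belongs to a different customer in your data.

```sql
set statistics io on

-- Filters on OrderNumber (the leading field) as well as Customer,
-- so the sorted order of the index can be used to jump to the record.
select OrderNumber, Customer from SalesOrder
where OrderNumber = '10017' and Customer = '30075'

-- Filters on Customer only. The leading field is unconstrained,
-- so the matching records could be anywhere in the index.
select OrderNumber from SalesOrder
where Customer = '30075'

set statistics io off
```

Comparing the two execution plans and the two sets of read counts shows how much the leading field matters.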

Now let’s create an index on the customer:

CREATE NONCLUSTERED INDEX IX_SalesOrder_Cust ON dbo.SalesOrder

(

Customer

)

and then run a query that returns all the order numbers for a specific customer:

select OrderNumber from SalesOrder

where Customer = '30075'

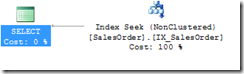

If you look at the execution plan you will notice something odd: it’s ignoring the new index and still doing an index scan:

![clip_image005[1] clip_image005[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgTBeE5cULDP1yoeHi2CUmAO_15hW_J3mSU9Dr5FDHOP10LLr2LpY4A211Q4V9J8-GuVxJib2oqCdLSJgtw1xmg9pY5f2ILnj0BFpmsF_f-ADhD4QMHT6HGkw7AFT3gz-G7VQ6iXL0d_fvH/?imgmax=800)

The reason this happens is that when SQL Server generates an execution plan it not only considers the structure of the table and indexes, but also looks at statistics about the data in the index. The details of statistics are outside the scope of this article, but for the purpose of this query I will say that the statistics for the Customer index tell SQL Server that customer numbers often appear multiple times in the table. Since the server needs to do a lookup to get the order number for each record, it assumes that it will be faster to scan the order index than it would be to use the customer index and do the lookups. I can re-run the query and force SQL Server to use the customer index like this:
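If you are curious what the server knows about the customer data, two quick checks are sketched below. The first counts orders per customer directly; the second asks SQL Server to display the statistics it keeps for the customer index. The output depends on your test data, so treat this as a way to explore rather than a set of expected results.

```sql
-- How many orders does each customer have? Customers with many orders
-- make a lookup-per-record plan more expensive.
SELECT Customer, COUNT(*) AS OrderCount
FROM dbo.SalesOrder
GROUP BY Customer
ORDER BY OrderCount DESC;

-- Show the statistics SQL Server keeps for the customer index
-- (header, density and histogram).
DBCC SHOW_STATISTICS ('dbo.SalesOrder', IX_SalesOrder_Cust);
```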

set statistics io on

select OrderNumber from SalesOrder with (index(IX_SalesOrder_Cust))

where Customer = '30075'

set statistics io off

The version of the query that did an index scan had to do 40 reads, but when I force this query to use the customer index it requires 92 reads, so in this case the server guessed correctly that the index scan is more efficient. The customer number in the test data is randomly generated, so using the customer index will not always be less efficient. If you happen to use a customer number that only has one or two orders, then the index version would be faster. SQL Server is making a best guess at what is most efficient, and even though certain executions of the query may end up being less efficient, in the long run its guess will likely result in better query performance.

To get SQL Server to use the index in this case we could do what we did with the order number index and add the other field to it. But since we already have an index on the order number, it’s probably not worthwhile to have the order number as part of the customer index key, so there is a second option for handling this:

drop index [IX_SalesOrder_Cust] ON [dbo].[SalesOrder]

CREATE NONCLUSTERED INDEX [IX_SalesOrder_Cust] ON [dbo].[SalesOrder]

(

[Customer] ASC

)

INCLUDE ([OrderNumber])

In this index I have added the INCLUDE clause which adds the OrderNumber to the index, but doesn’t actually make it part of the indexed field. Now SQL can use this index without having to do a lookup to get the OrderNumber.

You might wonder why you would use INCLUDE instead of just making the field part of the index key. If you are not going to use the field in a WHERE clause, then having it as an INCLUDE is more efficient: included fields are stored with the index records but are not part of the sort key, so the server doesn’t need to consider their values when positioning records in the index, which helps the performance of inserts, updates and deletes. Another advantage is that you can INCLUDE types of fields which cannot be used as index keys, varchar(max) fields for example.
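As a small illustration of that last point, here is a sketch using a made-up table with a varchar(max) field. Neither the SalesOrderNote table nor its index is part of the script for this article; they are assumptions for the example only.

```sql
-- Hypothetical table, not created by the article's script.
CREATE TABLE dbo.SalesOrderNote
(
    OrderNumber varchar(10)  NOT NULL,
    Note        varchar(max) NULL
);

-- A varchar(max) field cannot be used as an index key, so a statement
-- like this one is rejected:
-- CREATE NONCLUSTERED INDEX IX_Bad ON dbo.SalesOrderNote ( Note )

-- It can, however, be carried along in the index as an included field.
CREATE NONCLUSTERED INDEX IX_SalesOrderNote_OrderNumber
ON dbo.SalesOrderNote ( OrderNumber )
INCLUDE ( Note );
```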

So far we have been limiting the fields we return in the select, so let’s try retrieving all the fields:

select * from SalesOrder

where Customer = '30075'

This will once again result in a table scan. Even though we have an index on Customer, and OrderNumber is included in the index, the server would still need to do a lookup to get the rest of the fields, so it decides that a table scan would be more efficient. We can solve this issue by using a Clustered Index.

So far all the indexes we have built have been non-clustered indexes. As I explained earlier a non-clustered index stores the indexed fields in another part of the database with a pointer back to the actual data. By contrast, a clustered index stores the actual data records in the index order so no matter what fields we are selecting the lookup is not needed since the index and data are all in the same place. Since this index controls the order of the data, there can only be one clustered index per table because the table can only be sorted one way.

Let’s drop the original customer index and re-create it as clustered. The include is no longer needed since a clustered index effectively has all the fields included.

drop index [IX_SalesOrder_Cust] ON [dbo].[SalesOrder]

CREATE CLUSTERED INDEX IX_SalesOrder_Cust ON dbo.SalesOrder

(

Customer

)

Now if you re-run the query it will do an index seek.
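To confirm which indexes now exist on the table and which one is clustered, one option is to query the sys.indexes catalog view, as in this short sketch:

```sql
-- List the indexes on SalesOrder with their type.
-- type_desc is CLUSTERED, NONCLUSTERED, or HEAP (for a table
-- that has no clustered index).
SELECT name,
       index_id,
       type_desc,
       is_unique
FROM sys.indexes
WHERE object_id = OBJECT_ID('dbo.SalesOrder');
```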

Now let’s try this query.

set statistics io on

select * from SalesOrder

where orderDate = '1/1/2015'

set statistics io off

Because every field is part of the clustered index, the execution plan will show that it did a clustered index scan. The statistics on this query show 66 reads, which is 2 more reads than a table scan. I have read that, due to the way the data is organized, the clustered index scan is actually a little more efficient than a table scan, but index scans on a non-clustered index will normally be much more efficient than on a clustered index.

Everything I have shown here so far is for conditions in the WHERE clause, but this all applies to joins also. Just think of the ON part of the join as being a WHERE. These indexes will also come into play when you do updates or deletes with a WHERE clause.
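Here is a rough sketch of both cases. The dbo.Customer table and its fields are hypothetical and are not created by the script for this article, and the update assumes OrderDate is a date-type field.

```sql
-- Hypothetical lookup table, not part of the article's script.
CREATE TABLE dbo.Customer
(
    CustomerNumber varchar(10) NOT NULL PRIMARY KEY,
    CustomerName   varchar(50) NOT NULL
);

-- The ON condition matches records on SalesOrder.Customer, so an index
-- that leads with Customer can be considered for the join, just as it
-- would be for a WHERE clause on Customer.
SELECT c.CustomerName, o.OrderNumber
FROM dbo.Customer AS c
JOIN dbo.SalesOrder AS o
    ON o.Customer = c.CustomerNumber
WHERE c.CustomerNumber = '30075';

-- An UPDATE with a WHERE clause has to find its records first, so it can
-- benefit from the same index as the equivalent SELECT.
UPDATE dbo.SalesOrder
SET OrderDate = '20150102'
WHERE OrderNumber = '10017';
```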
